
YouTube clips can act like ‘vaccine’ against viral misinformation, large trial suggests

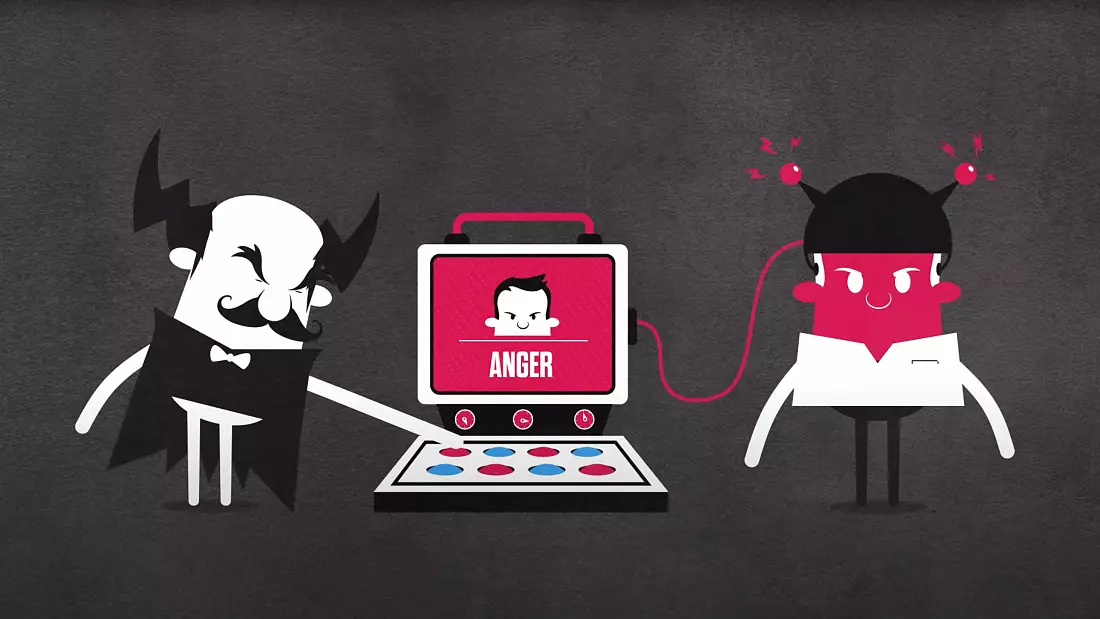

A still from one of the videos which “inoculate” viewers against misinformation. – Copyright Inoculation Science Project

By Luke Hurst • Updated: 24/08/2022 – 20:01

People can be “vaccinated” and at least partially protected against misinformation online, according to a new study, which found that familiarising people with common manipulation tactics helped them spot harmful content.

By explaining these tactics using short cartoons played during YouTube advertisement slots, the researchers were able to “inoculate” users against misinformation.

Researchers behind the Inoculation Science project compare the approach to a vaccine: exposing people to a “micro-dose” of misinformation in advance helps prevent them from falling for it in the future.

Described by the study authors as “psychological inoculation,” the technique was found to be consistently effective on users from across the political spectrum.

The trial on YouTube, which has more than 2 billion active users around the world, was the first “real world field study” of inoculation theory on a social media platform.

The authors also say the short cartoons sidestep the biases people may have about where information comes from and whether it is true. Instead, the clips give general advice about how to spot potential misinformation.

“Our interventions make no claims about what is true or a fact, which is often disputed,” said lead author Dr Jon Roozenbeek, from Cambridge University’s Social Decision-Making Lab.

“They are effective for anyone who does not appreciate being manipulated”.

He added: “The inoculation effect was consistent across liberals and conservatives. It worked for people with different levels of education, and different personality types. This is the basis of a general inoculation against misinformation”.

‘Prebunking’ misinformation

Working with Jigsaw, a unit within Google that the company says tackles threats to open societies, psychologists from the universities of Cambridge and Bristol made 90-second animated clips familiarising people with common misinformation techniques.

These include the use of emotional language, purposeful incoherence, false dichotomies, scapegoating, and ad-hominem attacks.

The idea is to “prebunk” misinformation before it is consumed by viewers, rather than debunking misinformation after it has already spread.

The authors argue that debunking is impossible to do at scale, and that prebunking may be more effective.

“YouTube has well over 2 billion active users worldwide. Our videos could easily be embedded within the ad space on YouTube to prebunk misinformation,” said study co-author Professor Sander van der Linden, Head of the Social Decision-Making Lab.

“Our research provides the necessary proof of concept that the principle of psychological inoculation can readily be scaled across hundreds of millions of users worldwide”.

The trial comprised seven experiments involving almost 30,000 participants.

The first six were controlled experiments involving 6,464 participants; the sixth was conducted a year after the first five to check that the earlier findings could be replicated.

Data was collected on each participant, including basic demographic information as well as levels of numeracy, conspiratorial thinking and interest in news, among other measures.

The researchers found that the inoculation videos improved people’s ability to spot misinformation and boosted their confidence in being able to do so again.

Two of the videos were then tested in YouTube advert slots as part of a large field experiment.

Around 5.4 million people in the US saw the videos, with almost a million watching for at least 30 seconds. Some of those users were then asked to complete a voluntary test question.

‘Manipulation tactics can be predicted’

“Harmful misinformation takes many forms, but the manipulative tactics and narratives are often repeated and can therefore be predicted,” said Beth Goldberg, co-author and Head of Research and Development for Google’s Jigsaw unit.

“Teaching people about techniques like ad-hominem attacks that set out to manipulate them can help build resilience to believing and spreading misinformation in the future.

“We’ve shown that video ads as a delivery method of prebunking messages can be used to reach millions of people, potentially before harmful narratives take hold,” Goldberg said.

The ability to recognise manipulation techniques at the heart of misinformation increased by 5 per cent on average among participants in the experiments.

“Users participated in tests around 18 hours on average after watching the videos, so the inoculation appears to have stuck,” said van der Linden.

The researchers say that scaling up this approach across social media platforms could substantially reduce the impact of misinformation.

Google says it’s already acting on the findings, and that its Jigsaw team will roll out a prebunking campaign across platforms in Poland, Slovakia, and the Czech Republic at the end of August to get ahead of emerging disinformation relating to Ukrainian refugees.